As Meta advances efforts to embed artificial intelligence in humanoid robots, the global tech industry is in a full-blown race to harness the power of AI. Tech leaders increasingly view generative AI not just as a tool but as a transformative force with the potential to address humanity’s most pressing challenges, from climate modelling to healthcare diagnostics. Yet as AI’s promise grows, so too do warnings about its existential risks. Historian Yuval Noah Harari, in his 2024 book Nexus, cautions that AI could “destroy human civilisation.” Others, including AI pioneers Geoffrey Hinton and Yoshua Bengio and business leaders such as Elon Musk, have voiced similar fears about generative AI’s unregulated proliferation.

Yet the dominant view in boardrooms is one of optimism. A 2025 PwC survey finds that 73% of business leaders expect AI to confer a significant competitive advantage, while Deloitte reports that 79% anticipate improvements in productivity, revenue, and cost efficiency. McKinsey reports that by 2024, 78% of global firms had adopted AI in some form. The AI market itself, valued at an estimated $279 billion in 2024, is projected to grow at a compound annual growth rate (CAGR) of 35.9% to reach $1.8 trillion by 2030.


Generative AI: The breakout catalyst

The arrival of generative AI tools such as ChatGPT has been a watershed. These systems, built on large language models like GPT-4 and its successors, are now embedded in finance, education, agriculture, logistics, retail, and media. Analysts estimate that AI could contribute up to $15.7 trillion to global GDP by 2030. As of mid-2025, ChatGPT has between 800 million and 1 billion weekly active users, a testament to its integration into both professional workflows and personal routines.

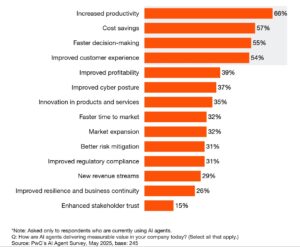

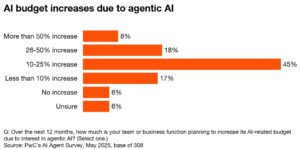

How AI agents are already delivering value

Workers, too, are adapting. Microsoft’s 2025 Work Trend Index reports that 86% of employees use AI tools to find the right information, 80% to summarise meetings, and 77% to structure their daily tasks. A global survey by the Adecco Group suggests that AI saves workers about one hour each day, particularly those in data-intensive fields such as computer science and mathematics. However, according to the Federal Reserve Bank of St. Louis (February 2025), the time savings are less pronounced in professions such as law, education, and social services.

HP’s 2024 Work Relationship Index notes that 60% of AI users report an improved work-life balance. But this optimism is tempered by Goldman Sachs research estimating that generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation. Ethan Mollick, co-director of the Generative AI Lab at the University of Pennsylvania, warns that over-reliance on AI could erode critical thinking skills among young workers. Similarly, MIT Media Lab researchers have observed diminished brain activity during AI-assisted writing tasks.

The cognitive and ethical trade-off

Social scientists and ethicists raise alarms about the phenomenon of “cognitive offloading”—outsourcing thinking to machines. Harari posits that if AI generates persuasive content at scale, it may “hack the operating system of society” by manipulating language, thought, and social narratives. The concern: humans may become passive participants in algorithmically mediated systems, relegated to the role of data processors in a machine-driven ecosystem.

These anxieties are not confined to ivory towers. A 2025 global survey by the University of Melbourne and KPMG, covering nearly 50,000 respondents across 47 countries including India, found that 54% of people were sceptical about the societal impact of AI systems. Trust was significantly lower in advanced economies (39%) than in emerging ones (57%). One in five users said that AI reduced real human interaction and collaboration in workplaces, raising concerns about emotional and organisational cohesion in AI-augmented environments.

Declining trust and regulatory imperative

Trust in AI is visibly eroding. The same Melbourne-KPMG survey showed that from 2022 to 2024, the share of people who believe AI’s benefits outweigh its risks fell from 50% to 41% globally. The sharpest declines were seen in Brazil (from 71% to 44%) and India (from 72% to 55%). This shift coincides with growing exposure to generative AI and a clearer understanding of its flaws: biases in training data, hallucinations, and opaque decision-making processes.

Crucially, 70% of respondents support stronger regulation to ensure AI systems operate ethically, safely, and transparently. Calls are rising for policies that define usage boundaries, ensure algorithmic accountability, and institute human-in-the-loop mechanisms for high-stakes applications in sectors such as healthcare, education, law enforcement, and public governance.

The paradox is now evident: AI is both a catalyst and a conundrum. Its potential to augment human productivity and reshape entire sectors is enormous. But its unregulated spread also carries the risk of social disruption, mass displacement, and diminished human agency.

The imperative is clear. Governments and corporations must work toward a robust framework of AI governance—anchored in transparency, fairness, and human rights. Ethical AI development must be supported by strong institutions, interdisciplinary research, and public discourse. Above all, the goal must be to ensure that AI remains a force for good—enhancing human potential rather than replacing it.

Archana Datta is a former director-general, Doordarshan and All India Radio.